Robust medical image segmentation from non-expert annotations with tri-network

Abstract

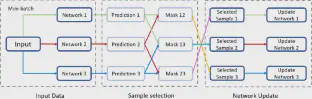

Deep convolutional neural networks (CNNs) have achieved commendable results on a variety of medical image segmentation tasks. However, CNNs usually require a large number of training samples with accurate annotations, which are extremely difficult and expensive to obtain in the medical image analysis field. In practice, we observe that junior trainees can, after some training, label medical images in some segmentation applications. These non-expert annotations are more easily accessible and can be regarded as a source of weak annotation to guide network learning. In this paper, we propose a novel Tri-network learning framework that uses non-expert annotations to alleviate the shortage of accurate annotations in medical segmentation tasks. Specifically, we maintain three networks in our framework, and each pair of networks alternately selects informative samples for training the third network, according to the consensus and difference between the pair's predictions. The three networks are jointly optimized in this collaborative manner. We evaluated our method on real and simulated non-expert annotated datasets. The experimental results show that our method effectively mines useful information from the non-expert annotations to improve segmentation performance and outperforms other competing methods.
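The pairwise selection idea can be illustrated with a minimal sketch. The abstract does not specify the selection rule, so everything below is an assumption made for illustration: agreement between two networks is scored with a Dice overlap of their binary predictions, and a hypothetical `keep_ratio` fraction of the most-agreed samples is passed to the third network. The function names (`dice_agreement`, `select_for_third`, `tri_network_selection`) are likewise hypothetical, and the "difference" criterion mentioned above is not modeled here.

```python
import numpy as np


def dice_agreement(a, b, eps=1e-7):
    """Dice overlap between two binary masks of the same shape."""
    a = a.astype(bool)
    b = b.astype(bool)
    inter = np.logical_and(a, b).sum()
    return (2.0 * inter + eps) / (a.sum() + b.sum() + eps)


def select_for_third(pred_a, pred_b, keep_ratio=0.5):
    """Return sorted indices of the samples on which two networks agree most.

    pred_a, pred_b: arrays of shape (N, H, W) holding binary masks.
    keep_ratio: assumed fraction of samples to keep (not from the paper).
    """
    scores = np.array([dice_agreement(x, y) for x, y in zip(pred_a, pred_b)])
    n_keep = max(1, int(round(keep_ratio * len(scores))))
    # Stable sort so that ties are resolved by the original sample order.
    order = np.argsort(-scores, kind="stable")
    return np.sort(order[:n_keep])


def tri_network_selection(preds, keep_ratio=0.5):
    """For each network k, let the other two networks pick its training samples.

    preds: list of three (N, H, W) arrays of binary predictions.
    Returns a dict mapping k to the selected sample indices for network k.
    """
    selected = {}
    for k in range(3):
        i, j = [m for m in range(3) if m != k]
        selected[k] = select_for_third(preds[i], preds[j], keep_ratio)
    return selected


if __name__ == "__main__":
    ones = np.ones((2, 2), dtype=int)
    zeros = np.zeros((2, 2), dtype=int)
    preds = [
        np.stack([ones, ones, zeros, ones]),
        np.stack([ones, zeros, zeros, ones]),
        np.stack([ones, ones, ones, zeros]),
    ]
    for k, idx in tri_network_selection(preds).items():
        print(f"network {k} trains on samples {idx.tolist()}")
```

In a full training loop, each network would then be updated only on the samples chosen for it by the other two, and the selection would be recomputed as the predictions change. How the chosen samples are weighted or combined with the non-expert labels is left open in this sketch.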